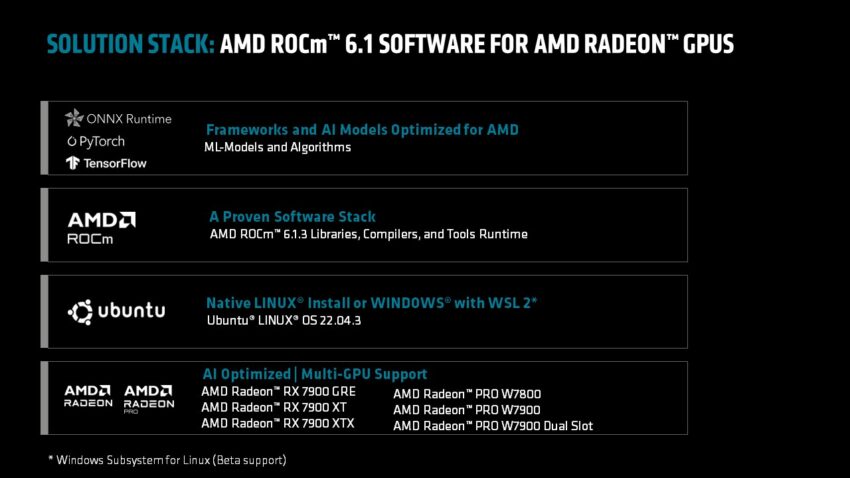

Radeon™ Software for Linux® version 24.10.3, including ROCm 6.1.3, brings several enhancements:

- Introduces TensorFlow support via ROCm on select Radeon™ GPUs.

- Officially supports the Radeon™ PRO W7900 Dual-Slot.

- Offers official support for multiple Radeon™ GPUs: dual RX 7900 XTX & W7900, as well as dual and quad W7900 Dual-Slot configurations.

- Provides beta support for ROCm via Windows Subsystem for Linux (WSL) on Windows platforms. For detailed instructions, refer to the Radeon Getting Started Guide.

- Note: The latest numpy v2.0 Python module is not compatible with the torch wheels in this release. To resolve this, downgrade to an earlier version, for instance: `pip3 install numpy==1.26.4`
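Before downgrading, it can help to confirm which numpy version is actually installed in the active environment. The following is a minimal sketch using only the Python standard library; the helper names (`is_torch_compatible_numpy`, `check_installed_numpy`) are hypothetical, and the "major version below 2" rule is an assumption drawn from the note above, not an official compatibility check.

```python
from importlib.metadata import PackageNotFoundError, version


def is_torch_compatible_numpy(version_string):
    """Return True if the numpy major version is below 2 (assumed rule)."""
    major = int(version_string.split(".")[0])
    return major < 2


def check_installed_numpy():
    """Describe the installed numpy version relative to the 2.0 boundary."""
    try:
        installed = version("numpy")
    except PackageNotFoundError:
        return "numpy is not installed in this environment"
    if is_torch_compatible_numpy(installed):
        return f"numpy {installed} is older than 2.0"
    # Suggested pin taken from the release note above.
    return f"numpy {installed} is 2.0 or newer; consider: pip3 install numpy==1.26.4"


if __name__ == "__main__":
    print(check_installed_numpy())
```

Running the script in the same virtual environment as the torch wheels shows whether the downgrade is needed at all.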

Known issues for Radeon™ Software:

- Radeon GPUs may not adequately support extensive simultaneous parallel workloads. It is advised not to exceed two concurrent compute workloads, especially when operating alongside a graphical environment (e.g., Linux desktop).

- Users may encounter intermittent GPU reset errors when using the Automatic1111 web UI with IOMMU enabled. Please refer to https://community.amd.com/t5/knowledge-base/tkb-p/amd-rocm-tkb for suggested solutions.

- The ROCm debugger exhibits instability and is not fully supported in this version.

- It is not recommended to enable “Automatic suspend state when idle” when running AI workloads.

- Connecting monitors to multiple GPUs on an Intel Sapphire Rapids-powered system may result in display issues. To mitigate this, connect all monitors to a single GPU.
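The advice above to stay at or below two concurrent compute workloads can be enforced in a job launcher. The sketch below is one possible approach, assuming each workload is wrapped in a zero-argument Python callable; `run_gpu_jobs` and `MAX_CONCURRENT_GPU_JOBS` are hypothetical names, and the cap only applies to jobs started through this helper, not to other processes using the GPU.

```python
from concurrent.futures import ThreadPoolExecutor

# Limit taken from the known issue above: no more than two concurrent workloads.
MAX_CONCURRENT_GPU_JOBS = 2


def run_gpu_jobs(jobs, max_concurrent=MAX_CONCURRENT_GPU_JOBS):
    """Run zero-argument callables, at most max_concurrent at a time.

    Results are returned in the same order as the input jobs.
    """
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        futures = [pool.submit(job) for job in jobs]
        return [future.result() for future in futures]
```

For example, `run_gpu_jobs([job_a, job_b, job_c])` starts `job_a` and `job_b` immediately and holds `job_c` until one of them finishes.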
